David Immerman is a Consulting Analyst for the TMT Consulting team based in Boston, MA. Prior to S&P Market Intelligence, David ran competitive intelligence for a supply chain risk management software startup and provided thought leadership and market research for an industrial software provider. Previously, David was an industry analyst in 451 Research’s Internet of Things channel, primarily covering the smart transportation and automotive technology markets.

When using a product like a smartphone, we do not often consider the hardware and software components operating underneath. Yet each time we open a powerful smartphone application, a technical symphony of ‘zeros and ones’ is working in harmony. The ability of these applications to operate seamlessly at runtime is a competitive requirement for many.

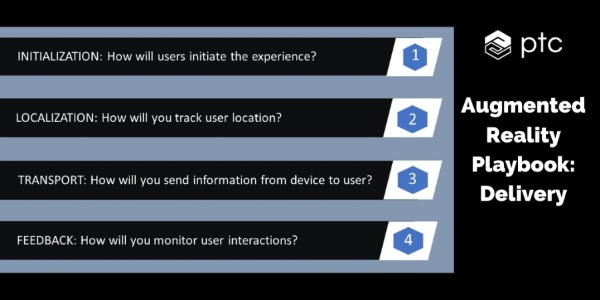

As more businesses pursue the creation of augmented reality applications, a key consideration is how the application will be delivered to users. An immersive and effective experience must avoid runtime malfunctions.

Businesses looking to develop an augmented reality delivery strategy need to ask themselves four key questions:

- How will users initiate the AR experience?

- How will you track user location?

- How will you send information from device to user?

- How will you monitor user interactions?

Below we begin to explore these crucial questions; however, for a more in-depth explanation and training, AR thought leader and PTC CEO Jim Heppelmann has created a Massive Open Online Course (MOOC) – How to Build an Augmented Reality Strategy for Your Business.

To take a step back, it will be useful to read our first and second installments based on the MOOC: Jump-Start an Augmented Reality Strategy with These 6 Questions and 5 Content Considerations for your AR Strategy.

Question 1: How will users initiate the experience?

Options: Hard-coded, Menu Pick, Scan Marker, Near-field Wireless, Sensor Fusion

Determining how the AR experience will be activated, or ‘initialized’, is a foundational AR delivery step.

- Hard-coded applications have the content built into them in a one-for-one approach. This is great for demos but presents future scaling challenges by creating an unmanageable number of purpose-built apps.

- A general-purpose application, by contrast, lets the developer more seamlessly layer on different forms of content in different formats, for example through a Menu Pick.

- A unique marker (QR code, bar code) could generate the content from this general-purpose application, as could emerging techniques like Near-field wireless connectivity, which rely on the AR device’s proximity.

- With Sensor Fusion, several sources of data (user, GPS, asset health, etc.) can act as indicators to initiate the experience. For example, a service technician approaching a malfunctioning machine is delivered timely and accurate service instructions.
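
The Sensor Fusion example above can be sketched as a simple rule that combines several signals. This is a minimal illustration, not a prescribed implementation: the `Context` fields, the `should_initiate` function, and the 5-metre threshold are all hypothetical names and values chosen for the example.

```python
from dataclasses import dataclass


@dataclass
class Context:
    """Signals fused to decide whether to start an AR experience (all hypothetical)."""
    user_role: str              # e.g. "technician"
    distance_to_asset_m: float  # from GPS, a beacon, or similar
    asset_status: str           # e.g. "ok" or "fault", from an asset health feed


def should_initiate(ctx: Context, max_distance_m: float = 5.0) -> bool:
    """Start the experience only when every signal agrees."""
    return (
        ctx.user_role == "technician"
        and ctx.distance_to_asset_m <= max_distance_m
        and ctx.asset_status == "fault"
    )


nearby_fault = Context("technician", 3.2, "fault")
nearby_healthy = Context("technician", 3.2, "ok")
print(should_initiate(nearby_fault))    # True
print(should_initiate(nearby_healthy))  # False
```

In practice the rule would likely be richer (confidence scores, time windows), but the idea is the same: no single signal initiates the experience on its own.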

Question 2: How will you track user location?

Options: Manual, GPS, Beacons, Image Recognition, Object Recognition, Terrain Recognition, Sensor Fusion, AI

There are a variety of application delivery methods that leverage a user’s location (localization) within his/her surrounding environment. Deciding which method to use for the AR application primarily boils down to whether the use case is spatial or object-based in nature.

- GPS is a useful location reference point in outdoor environments, while beacons are more promising in indoor situations (e.g. retail applications).

- Recognizing an image (QR/bar code), object (machine), and terrain (a place) through computer vision can be used as location points to generate AR experiences.

- A combination of these techniques, such as GPS determining the user’s coordinates and object recognition identifying an exact machine, can be brought together through sensor fusion for even more accurate localization.
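
To make the combined approach concrete, here is a rough sketch in which GPS narrows the search to nearby assets and object recognition picks the exact machine. The `ASSETS` registry, the coordinates, the `localize` function, and the 50-metre radius are assumptions made up for illustration; the distance calculation is the standard haversine formula.

```python
import math


def haversine_m(lat1, lon1, lat2, lon2):
    """Great-circle distance in metres between two latitude/longitude points."""
    r = 6_371_000  # mean Earth radius in metres
    p1, p2 = math.radians(lat1), math.radians(lat2)
    dphi = p2 - p1
    dlmb = math.radians(lon2 - lon1)
    a = math.sin(dphi / 2) ** 2 + math.cos(p1) * math.cos(p2) * math.sin(dlmb / 2) ** 2
    return 2 * r * math.asin(math.sqrt(a))


# Hypothetical asset registry: id -> (model, latitude, longitude)
ASSETS = {
    "press-01": ("press", 42.3601, -71.0589),
    "press-02": ("press", 42.3650, -71.0540),
    "lathe-01": ("lathe", 42.3601, -71.0590),
}


def localize(user_lat, user_lon, recognized_model, radius_m=50.0):
    """Return the nearest asset within radius_m whose model matches what vision recognized."""
    candidates = [
        (haversine_m(user_lat, user_lon, lat, lon), asset_id)
        for asset_id, (model, lat, lon) in ASSETS.items()
        if model == recognized_model
    ]
    in_range = [c for c in candidates if c[0] <= radius_m]
    return min(in_range)[1] if in_range else None


print(localize(42.3601, -71.0589, "press"))  # press-01
```

Neither signal is sufficient alone here: GPS cannot tell the press from the lathe a few metres away, and recognition cannot tell the two presses apart.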

Question 3: How will you send information from device to user?

Options: Cached, Downloaded, Streaming

Technical questions around the application’s data are critical for the ‘transport’ question. The data volume and frequency the experience will require are key when contemplating these three options and getting the user the right information at the right time.

- Cached is building content into the application, which requires derivative rework to update the app every time you want to add new content.

- Downloading content on-the-fly into a general-purpose application makes better use of compute resources, as only the information required by the user is downloaded at the right time. This is popular when there is a lot of potential data that could be delivered, but most of it is only needed periodically.

- Some AR experiences must accommodate changing data, such as in IoT where the user is looking at a live machine’s feed. This is where streaming is most appropriate. IoT + AR use cases are poised to benefit different value areas, including enhancing human capabilities (like remote support and training) and managing equipment (maintenance instructions and guidance) and spaces (inventory monitoring, spatial optimization).
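
The trade-off between the three options can be summarized as a small decision rule based on how often the data changes and how much of it there is. The sketch below is only one possible reading of the guidance above; `choose_transport`, its parameters, and the 100 MB cache budget are hypothetical and would need tuning for a real application.

```python
from enum import Enum


class Transport(Enum):
    CACHED = "cached"
    DOWNLOADED = "downloaded"
    STREAMING = "streaming"


def choose_transport(changes_live: bool, content_mb: float,
                     cache_budget_mb: float = 100.0) -> Transport:
    """Pick a delivery option from data frequency and volume (thresholds are illustrative)."""
    if changes_live:
        return Transport.STREAMING   # live data, e.g. a machine's IoT feed
    if content_mb <= cache_budget_mb:
        return Transport.CACHED      # small, stable content can ship inside the app
    return Transport.DOWNLOADED      # large catalog, fetched only when needed


print(choose_transport(True, 5.0))      # Transport.STREAMING
print(choose_transport(False, 20.0))    # Transport.CACHED
print(choose_transport(False, 2000.0))  # Transport.DOWNLOADED
```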

Question 4: How will you monitor user interactions?

Options: None, Mobile Touchscreen, Voice, Gesture, Virtual UI/Screen, Eye Tracking, Location, IoT Sensors

While the AR application delivers an array of digital information to the user, in many instances inputs from the user can inform interactions within the experience. There are several ways to enable these two-way interactions:

- Mobile touchscreens for smartphones and tablets provide user inputs and feedback with the AR application.

- Voice, Gesture, and Virtual UIs are emerging hands-free interface mechanisms that leverage the AR device’s native sensors for interactive experiences.

- Eye tracking, available in AR headwear like HoloLens 2, is used as a visual input that tells the application what the user is looking at.

- With a user’s Location as an input, they could walk by different areas in a factory and experience many different proximity-based applications. IoT sensors such as cameras will also be increasingly used.

Final Thoughts

Ensuring that the technical infrastructure is set to deliver the application seamlessly at runtime is critical to positive user experiences and workforce productivity. These foundational delivery considerations are the final chapter in Heppelmann’s AR Playbook (see blogs on augmented reality strategy and content).

Download the full 90-minute AR MOOC for more detailed considerations.